
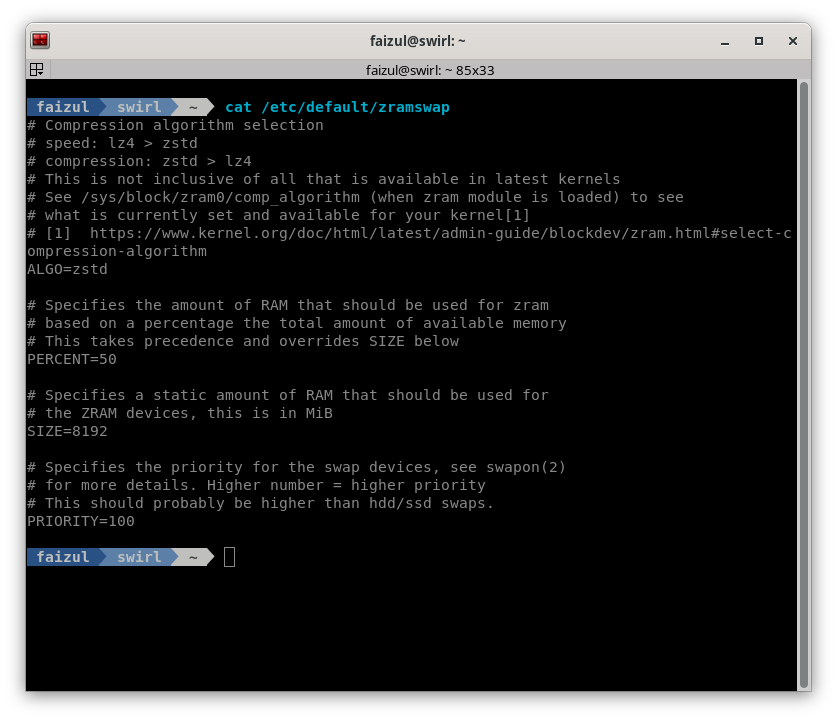


9M2PJU


The Role of Compression Algorithms in ZRAM

ZRAM relies on compression algorithms to reduce the size of data stored in memory. These algorithms analyze raw data for repeated patterns and encode it in a more compact form. ZRAM presents the result as a block device backed by RAM (for example /dev/zram0), most often used as swap. Each page written to the device is compressed and kept in memory. When the system needs that page again, ZRAM decompresses it back to its original form.
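The store-and-decompress cycle described above can be modelled in a few lines of Python. This is a simplified sketch, not the kernel's implementation. It makes the following assumptions:

- The standard-library zlib module stands in for whichever compressor ZRAM is configured with.
- The class name ToyZramStore is hypothetical.
- The size cutoff for keeping a page uncompressed is hypothetical.

The sketch also mimics two real ZRAM behaviours in simplified form. Pages filled with a single repeated value are recorded without compression. Pages that barely compress are kept as-is.

```python
import zlib

PAGE_SIZE = 4096  # common Linux page size (x86-64, most ARM64 configs)


class ToyZramStore:
    """Simplified, hypothetical model of a zram-like compressed page store."""

    def __init__(self, level: int = 1, raw_cutoff: int = 3 * PAGE_SIZE // 4):
        self.level = level            # zlib level, standing in for the real backend
        self.raw_cutoff = raw_cutoff  # hypothetical: at or above this size, keep page raw
        self.slots = {}               # page index -> (kind, payload)

    def write_page(self, index: int, page: bytes) -> None:
        if len(page) != PAGE_SIZE:
            raise ValueError("page must be exactly PAGE_SIZE bytes")
        # Same-filled page: remember only the fill value. (The kernel checks
        # machine words; this sketch checks single bytes.)
        if page.count(page[0]) == PAGE_SIZE:
            self.slots[index] = ("same", page[0])
            return
        compressed = zlib.compress(page, self.level)
        if len(compressed) >= self.raw_cutoff:
            # Poorly compressible page: little to save, so skip decompression later.
            self.slots[index] = ("raw", page)
        else:
            self.slots[index] = ("compressed", compressed)

    def read_page(self, index: int) -> bytes:
        kind, payload = self.slots[index]
        if kind == "same":
            return bytes([payload]) * PAGE_SIZE
        if kind == "raw":
            return payload
        return zlib.decompress(payload)  # decompress on access

    def stored_bytes(self) -> int:
        """Approximate memory held by the store (ignores allocator overhead)."""
        return sum(1 if kind == "same" else len(payload)
                   for kind, payload in self.slots.values())


store = ToyZramStore()
store.write_page(0, bytes(PAGE_SIZE))                     # zero page
store.write_page(1, (b"log line 42\n" * 400)[:PAGE_SIZE])  # repetitive text
print(store.stored_bytes(), "bytes stored for", 2 * PAGE_SIZE, "bytes of pages")
```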

The choice of compression algorithm directly impacts:

- Compression Ratio: How much the data is reduced in size.

- CPU Overhead: The computational resources required to compress and decompress data.

- Latency: The time taken to compress and decompress data, which affects system responsiveness.

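- Memory Fragmentation: How efficiently the compressed data can be packed into memory. The allocator does the packing, but the sizes of the compressed pages an algorithm produces affect how much space is wasted.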
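To put numbers on the first three criteria, a small harness can time any compress/decompress pair on a single 4 KiB page. This is a sketch that assumes zlib as the compressor under test, and the function name measure is hypothetical. Timings measured from Python include interpreter overhead and will not match in-kernel performance, so only relative comparisons on the same machine are meaningful. Fragmentation cannot be observed this way, because it depends on how the kernel's zsmalloc allocator packs the compressed objects.

```python
import time
import zlib
from typing import Callable, Dict

PAGE_SIZE = 4096


def measure(compress: Callable[[bytes], bytes],
            decompress: Callable[[bytes], bytes],
            page: bytes,
            rounds: int = 1000) -> Dict[str, float]:
    """Return compression ratio and mean per-page latencies in microseconds."""
    compressed = compress(page)
    if decompress(compressed) != page:
        raise ValueError("round trip did not reproduce the input")

    start = time.perf_counter()
    for _ in range(rounds):
        compress(page)
    compress_us = (time.perf_counter() - start) / rounds * 1e6

    start = time.perf_counter()
    for _ in range(rounds):
        decompress(compressed)
    decompress_us = (time.perf_counter() - start) / rounds * 1e6

    return {
        "ratio": len(page) / len(compressed),  # e.g. 2.0 means 2:1
        "compress_us": compress_us,
        "decompress_us": decompress_us,
    }


page = (b"GET /index.html 200\n" * 205)[:PAGE_SIZE]
stats = measure(lambda p: zlib.compress(p, 1), zlib.decompress, page)
print(f"ratio {stats['ratio']:.1f}:1, "
      f"compress {stats['compress_us']:.1f} us, "
      f"decompress {stats['decompress_us']:.1f} us")
```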

Now, let’s explore the most commonly used compression algorithms in ZRAM in detail.

1. LZ4

Overview

- Type: Fast compression algorithm.

- Developer: Yann Collet.

- Characteristics: LZ4 prioritizes speed over compression ratio. It achieves moderate compression ratios but excels in low-latency compression and decompression.

Key Features

- Speed: LZ4 is one of the fastest compression algorithms available. Its design minimizes CPU overhead, making it ideal for systems where performance is critical.

- Compression Ratio: Moderate (typically around 2:1 for general-purpose data).

- Use Case: Best suited for systems with limited CPU power or workloads that require fast access to compressed data, such as real-time applications or embedded systems.

Example Use Case

- A Raspberry Pi running a lightweight desktop environment can benefit from LZ4 because it reduces memory usage without significantly taxing the CPU.
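On a Linux system, the compressor is selected through the zram device's sysfs attributes. The sketch below assumes the zram module is loaded and that /sys/block/zram0 exists. The helper configure_zram is hypothetical, not a standard tool.

The ordering is the important detail. comp_algorithm has to be written before disksize. Setting disksize initialises the device, and after that the kernel refuses algorithm changes until the device is reset.

```python
from pathlib import Path


def configure_zram(device_dir: Path, algorithm: str = "lz4",
                   disksize: str = "512M") -> None:
    """Hypothetical helper: pick the compressor, then size the zram device.

    device_dir is normally /sys/block/zram0. The device must still be
    uninitialised (disksize 0), otherwise the kernel rejects the algorithm write.
    """
    # 1. Choose the compressor first; it can only change before initialisation.
    (device_dir / "comp_algorithm").write_text(algorithm)
    # 2. Setting disksize initialises the device with the chosen compressor.
    #    Size suffixes such as K, M and G are accepted.
    (device_dir / "disksize").write_text(disksize)
```

Running it for real requires root and an uninitialised device, for example configure_zram(Path("/sys/block/zram0")). Follow it with mkswap /dev/zram0 and swapon /dev/zram0 to use the device as swap. Pointing device_dir at a temporary directory instead lets you check the write order without touching the kernel.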

Pros

- Extremely fast compression and decompression.

- Low CPU overhead.

- Predictable performance.

Cons

- Lower compression ratios than algorithms such as ZSTD.

2. ZSTD (Zstandard)

Overview

- Type: High-performance compression algorithm.

- Developer: Yann Collet at Facebook (now Meta).

- Characteristics: ZSTD offers a wide range of compression levels, allowing users to balance between speed and compression ratio. At higher levels, it achieves excellent compression ratios but at the cost of increased CPU usage.

Key Features

- Compression Ratio: Excellent (up to 3:1 or higher for general-purpose data).

- Speed: Configurable. At its lowest levels, ZSTD approaches LZ4's compression speed, although LZ4 still decompresses considerably faster. At higher levels, ZSTD sacrifices speed for better compression.

- Flexibility: ZSTD supports compression levels from 1 to 22 (plus negative “fast” levels in newer releases), giving fine-grained control over performance and efficiency. How much of this range ZRAM exposes depends on the kernel version.
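The level trade-off can be observed directly. Most Python versions ship no ZSTD binding in the standard library, so this sketch uses zlib's levels 1 to 9 as a stand-in for ZSTD's wider range. With a ZSTD binding installed (for example the third-party zstandard package), the same loop applies.

The function name sweep_levels and the synthetic input are assumptions for illustration. Higher levels typically trade compression time for smaller output.

```python
import time
import zlib
from typing import Iterable, List, Tuple


def sweep_levels(data: bytes,
                 levels: Iterable[int] = (1, 6, 9)) -> List[Tuple[int, float, float]]:
    """Compress data at each level; return (level, ratio, milliseconds)."""
    results = []
    for level in levels:
        start = time.perf_counter()
        compressed = zlib.compress(data, level)
        elapsed_ms = (time.perf_counter() - start) * 1e3
        if zlib.decompress(compressed) != data:
            raise ValueError(f"round trip failed at level {level}")
        results.append((level, len(data) / len(compressed), elapsed_ms))
    return results


# Synthetic log-like input; substitute representative data for meaningful numbers.
sample = b"".join(f"user={i % 97} action=login status=ok\n".encode()
                  for i in range(5000))
for level, ratio, ms in sweep_levels(sample):
    print(f"level {level}: ratio {ratio:.2f}:1 in {ms:.2f} ms")
```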

Example Use Case

- A server running memory-intensive applications (e.g., databases or virtual machines) can use ZSTD at a moderate compression level to maximize memory savings without overwhelming the CPU.

Pros

- High compression ratios at higher levels.

- Configurable trade-off between speed and compression.

- Modern and actively maintained.

Cons

- Higher CPU overhead at higher compression levels.

- May not be suitable for systems with weak CPUs.

3. LZO (Lempel-Ziv-Oberhumer)

Overview

- Type: Fast compression algorithm.

- Developer: Markus Oberhumer.

- Characteristics: LZO is similar to LZ4 in that it prioritizes speed over compression ratio. However, it is slightly less efficient than LZ4 in terms of both speed and compression.

Key Features

- Speed: Very fast, though not as fast as LZ4.

- Compression Ratio: Moderate (similar to LZ4).

- Use Case: Suitable for legacy systems or environments where LZ4 is not available.

Example Use Case

- An older Linux distribution that does not support LZ4 might use LZO as a fallback option.
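Whether LZ4 is available can be checked at runtime. Reading /sys/block/zram0/comp_algorithm returns the compressors the running kernel supports, with the active one in square brackets. The sketch below parses that text and picks the first entry from a preference list.

The function names and the preference order are assumptions for illustration. The sample string in the usage line is only an example of the format.

```python
from typing import List, Optional, Sequence, Tuple


def parse_comp_algorithm(text: str) -> Tuple[List[str], Optional[str]]:
    """Split comp_algorithm contents into (available, active).

    The kernel prints every supported compressor and brackets the active one.
    """
    available: List[str] = []
    active: Optional[str] = None
    for token in text.split():
        if token.startswith("[") and token.endswith("]"):
            token = token[1:-1]
            active = token
        available.append(token)
    return available, active


def pick_algorithm(available: Sequence[str],
                   preferences: Sequence[str] = ("lz4", "lzo-rle", "lzo")) -> str:
    """Return the first preferred compressor the kernel offers."""
    for name in preferences:
        if name in available:
            return name
    raise LookupError("none of the preferred compressors is available")


# Example contents; on a real system use
# open("/sys/block/zram0/comp_algorithm").read()
available, active = parse_comp_algorithm("lzo [lzo-rle] lz4 lz4hc zstd\n")
print(active, "->", pick_algorithm(available))
```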

Pros

- Simple and reliable.

- Low CPU overhead.

Cons

- Slightly inferior to LZ4 in terms of performance and compression ratio.

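- Less commonly chosen today now that LZ4 and ZSTD are widely available, although its lzo-rle variant has been the default zram compressor in mainline kernels since Linux 5.1 unless the kernel build or distribution selects another.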

4. Deflate (via zlib)

Overview

- Type: General-purpose compression algorithm.

- Developer: Phil Katz (the zlib implementation is by Jean-loup Gailly and Mark Adler).

- Characteristics: Deflate is the algorithm used by gzip and zlib. It provides good compression ratios but is slower than LZ4 and LZO.
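Python's zlib module implements Deflate, which makes it handy for exploring a property that applies to every algorithm in this article. ZRAM compresses memory one page at a time, so a compressor can only exploit redundancy inside a single page.

The sketch compares compressing a 32 KiB buffer page by page against compressing it in one go. The function names are hypothetical. The input (a random 2 KiB block repeated 16 times) is synthetic and chosen to make the effect obvious.

```python
import random
import zlib

PAGE_SIZE = 4096


def per_page_size(data: bytes, level: int = 6) -> int:
    """Total Deflate output when each page is compressed on its own (ZRAM-style)."""
    return sum(len(zlib.compress(data[i:i + PAGE_SIZE], level))
               for i in range(0, len(data), PAGE_SIZE))


def whole_buffer_size(data: bytes, level: int = 6) -> int:
    """Deflate output when the whole buffer is compressed as one stream."""
    return len(zlib.compress(data, level))


# Synthetic input: a 2 KiB random block repeated 16 times (32 KiB). Each page
# holds two copies, but only whole-buffer compression can point back across pages.
block = random.Random(0).randbytes(2048)
data = block * 16
print("per page:", per_page_size(data), "bytes; whole buffer:",
      whole_buffer_size(data), "bytes")
```

The per-page total comes out several times larger. Each page must pay again for the random block it cannot reference from a neighbouring page.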

Key Features

- Compression Ratio: Good (better than LZ4 and LZO but worse than ZSTD).

- Speed: Considerably slower than LZ4 and LZO for both compression and decompression.

- Use Case: Rarely used in ZRAM due to its higher CPU overhead compared to modern alternatives.

Example Use Case

- Systems with abundant CPU resources and a need for better compression than LZ4 or LZO might consider Deflate.

Pros

- Well-established and widely supported.

- Good compression ratios.

Cons

- Higher CPU overhead.

- Slower than LZ4 and LZO.

5. LZ77 and LZ78 Variants

Overview

- Type: Foundational compression algorithms.

- Developer: Abraham Lempel and Jacob Ziv.

- Characteristics: LZ77 and LZ78 are the basis for many modern compression algorithms. LZ77 is the ancestor of LZ4, LZO, ZSTD, and Deflate. It replaces repeated patterns with references (an offset and a length) to earlier occurrences in a sliding window. LZ78 instead builds an explicit dictionary of phrases and led to LZW.
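The back-reference idea behind LZ77 fits in a short sketch. This is a textbook-style LZ77 encoder that emits (offset, length, next byte) triples. It uses a brute-force match search, and its window and match-length limits are hypothetical.

It is not how LZ4 or Deflate lay out their output. Production codecs find matches with hash tables and pack tokens into compact bit or byte formats.

```python
from typing import List, Tuple

Token = Tuple[int, int, int]  # (offset back, match length, next literal byte)


def lz77_compress(data: bytes, window: int = 4096, max_len: int = 18) -> List[Token]:
    """Textbook LZ77: replace repeats with (offset, length) references.

    Brute-force search over the sliding window; real codecs use hash tables.
    """
    tokens: List[Token] = []
    i, n = 0, len(data)
    while i < n:
        best_off, best_len = 0, 0
        for j in range(max(0, i - window), i):
            length = 0
            # Keep one byte in reserve for the literal; a match may run past i
            # (overlapping copy), which the decoder handles byte by byte.
            while (length < max_len and i + length < n - 1
                   and data[j + length] == data[i + length]):
                length += 1
            if length > best_len:
                best_off, best_len = i - j, length
        tokens.append((best_off, best_len, data[i + best_len]))
        i += best_len + 1
    return tokens


def lz77_decompress(tokens: List[Token]) -> bytes:
    out = bytearray()
    for offset, length, literal in tokens:
        for _ in range(length):
            out.append(out[-offset])  # copy from `offset` bytes back
        out.append(literal)
    return bytes(out)


text = b"abracadabra abracadabra abracadabra"
tokens = lz77_compress(text)
print(len(text), "bytes ->", len(tokens), "tokens")
```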

Key Features

- Compression Ratio: Varies depending on the implementation.

- Speed: Depends on the implementation; a straightforward match search is much slower than the hash-table lookups used by LZ4.

- Use Case: Not offered directly as ZRAM compressors, but the foundation for the algorithms that are.

Example Use Case

- Research or custom implementations may experiment with LZ77/LZ78 variants.

Pros

- Fundamental to understanding compression theory.

- Flexible and adaptable.

Cons

- Not optimized for modern hardware.

- Outperformed by newer algorithms.

Choosing the Right Algorithm for Your Use Case

The choice of compression algorithm depends on your system’s hardware capabilities and workload requirements. Here are some guidelines:

For Speed-Critical Applications:

- Use LZ4 if you need minimal CPU overhead and fast compression/decompression.

- Avoid algorithms like ZSTD at high compression levels.

For Memory-Constrained Systems:

- Use ZSTD at moderate compression levels to achieve a balance between memory savings and CPU usage.

- Consider LZ4 if CPU resources are extremely limited.

For Legacy Systems:

- Use LZO or Deflate if newer algorithms like LZ4 or ZSTD are unavailable.

For Servers with High Memory Pressure:

- Use ZSTD at higher compression levels to maximize memory savings, provided the server has sufficient CPU resources.
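The guidelines above can be encoded as a small decision helper. The workload labels, the preference orders, and the function name are assumptions that mirror this article's rules of thumb rather than any standard tool.

The helper returns only an algorithm name. ZRAM's comp_algorithm attribute selects the algorithm, not its compression level.

```python
from typing import Sequence

# Preference orders mirroring the guidelines above (assumed, not standard).
SPEED_FIRST = ("lz4", "lzo-rle", "lzo")
RATIO_FIRST = ("zstd", "lz4", "lzo-rle", "lzo", "deflate")
LEGACY_FALLBACK = ("lzo-rle", "lzo", "deflate")


def recommend(workload: str, cpu_headroom: str, available: Sequence[str]) -> str:
    """Suggest a zram compressor.

    workload: "latency" (speed-critical), "memory" (memory-constrained) or
    "server" (high memory pressure); cpu_headroom: "low" or "high".
    """
    if workload not in ("latency", "memory", "server"):
        raise ValueError(f"unknown workload: {workload!r}")
    if cpu_headroom not in ("low", "high"):
        raise ValueError(f"unknown cpu_headroom: {cpu_headroom!r}")

    # Speed-critical work, or too little spare CPU, favours LZ4.
    if workload == "latency" or cpu_headroom == "low":
        preferences = SPEED_FIRST
    else:
        # Memory savings matter and CPU is available: favour ZSTD.
        preferences = RATIO_FIRST

    # Fall back to legacy options if nothing preferred is offered.
    for name in (*preferences, *LEGACY_FALLBACK):
        if name in available:
            return name
    raise LookupError("no supported compressor found")


print(recommend("server", "high", ["lzo", "lzo-rle", "lz4", "zstd"]))
```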

Conclusion

The choice of compression algorithm is a critical factor in optimizing ZRAM’s performance. Each algorithm has its strengths and weaknesses, and the best choice depends on your specific use case. For most users, LZ4 strikes an excellent balance between speed and efficiency, while ZSTD offers superior compression ratios for systems with sufficient CPU resources.

By understanding the characteristics of these algorithms and experimenting with different configurations, you can tailor ZRAM to meet the unique demands of your system. Whether you’re managing a memory-constrained embedded device or a high-performance server, ZRAM’s flexibility ensures that you can find a solution that works for you.
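When experimenting, the kernel reports ZRAM's actual savings in /sys/block/zram0/mm_stat. Its first three fields are orig_data_size, compr_data_size and mem_used_total, all in bytes:

- orig_data_size and compr_data_size together give the algorithm's compression ratio.
- mem_used_total also includes allocator fragmentation and metadata overhead, so comparing it with compr_data_size shows the fragmentation cost mentioned earlier.

The sketch assumes that field order, and the function name is hypothetical. The sample line is made-up data for illustration, not a measurement.

```python
from typing import Dict


def summarize_mm_stat(text: str) -> Dict[str, float]:
    """Summarise /sys/block/zramN/mm_stat (first three fields, in bytes)."""
    fields = [int(value) for value in text.split()]
    orig_data_size, compr_data_size, mem_used_total = fields[:3]
    return {
        # What the algorithm achieved on the stored pages.
        "compression_ratio": orig_data_size / compr_data_size if compr_data_size else 0.0,
        # What the system actually saved once allocator overhead is included.
        "effective_ratio": orig_data_size / mem_used_total if mem_used_total else 0.0,
        # Memory spent beyond the compressed payload (fragmentation, metadata).
        "overhead_bytes": mem_used_total - compr_data_size,
    }


# Made-up sample line for illustration only; on a real system use
# open("/sys/block/zram0/mm_stat").read()
sample = "8192000 2048000 2228224 0 2228224 50 0 3"
print(summarize_mm_stat(sample))
```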
